F2: From the Big Bang to the future Universe


Cosmology is the study of the Universe on its largest scales and of its evolution. In the successful big bang model, the early Universe is hot and dense and cools down as it expands. The status of the original singularity (the so-called big bang) from which space emerged is a central question. It may be studied through the gravitational waves produced immediately after the big bang, for example during inflation, an explosive phase of expansion that immediately follows the big bang.

The study of the expansion of the Universe has recently revealed an unexpected acceleration in the most recent stages of its evolution, which is attributed to a new form of energy known as dark energy. Understanding the nature of dark energy and the fate of the Universe is another fundamental question.

Although these scientific questions are specific to the understanding of the early Universe and its subsequent evolution, the methods used have far-reaching applications. For example, in the context of the Space Campus, teams from IPGP and APC realized that they are using similar methods to analyze seismic data on the Moon's surface and fluctuations in the Cosmic Microwave Background (CMB): in both cases, fluctuations on a sphere. Cosmology also requires processing ever larger amounts of data. For example, the 10-year observations by the LSST telescope (performing large sky surveys to understand the properties of dark energy) will require a database of 60 petabytes of raw data. Processing such a vast amount of data is a challenge that will place the field at the centre of the treatment of massive data sets.

We have identified a certain number of axes:

1. Support of the Paris Centre for Cosmological Physics

All the fundamental questions listed above are addressed by the Paris Centre for Cosmological Physics (PCCP: http://www.pariscosmo.fr). We stressed earlier that the goals of PCCP are very similar to those advertised by the LabEx, although the PCCP is more focussed thematically but has a larger laboratory base. The LabEx supports the Centre by providing one postdoc position (PCCP fellow) every year, as well as financing the visits of scientists with a high international visibility through a special UnivEarthS-PCCP programme.

2. B-mode polarization of the Cosmic Microwave Background

The contribution of the Labex has been crucial in structuring the team and preparing the coming years in these fields, with an emphasis on consolidating a major contribution of the French community to three CMB projects: QUBIC (as the vector of a new instrumental concept, bolometric interferometry), LiteBIRD (the only CMB B-mode polarisation space project supported by a space agency) and POLARBEAR/Simons Observatory (through which we are building the main French participation in the US CMB-S4 programme).

The measurement of the B polarization modes of the Cosmic Microwave Background (CMB) may provide a direct probe of primordial gravitational waves produced during the inflationary epoch. Measuring the polarization of the CMB precisely is thus the next exciting frontier. Its characterization was improved by the Planck satellite mission (launched May 14, 2009). The weakness of the B-mode signal requires the development of highly sensitive experiments with an exquisite control of systematic errors. Most of the experiments or projects dedicated to this quest are based on the well-known direct imaging technology. While imagers measure maps of the CMB, interferometers directly measure Fourier components of the Stokes parameters and are thus expected to be less sensitive to systematic effects. Unfortunately, the classical heterodyne interferometry concept may have reached its limits in terms of scale and sensitivity. Bolometric interferometry, however, could combine the advantages of interferometry in handling systematic effects with those of direct detectors in terms of sensitivity.

Although many experiments have already been proposed in the USA (ground-based and balloon-borne), only one project has emerged in Europe: the QUBIC programme, supported by a French-Italian-USA-UK-Irish collaboration (http://www.qubic.org). The APC CMB team, including its experimental laboratory, is leading this research effort, with particular interest in:

- Conception and design of the QUBIC instrument

- Data analysis and simulations

- Development of the detection chain:

  - Bolometer arrays based on Transition Edge Sensor (TES) technology with multiplexed readout.

  - Kinetic Inductance Detectors (KIDs), a new path towards large detector arrays: this new detection technique uses the variation of the kinetic inductance of a superconductor when it absorbs a photon flux. Their advantages are the following: (i) they are relatively simple to fabricate, (ii) the readout electronics is inherently multiplexed, allowing a large number of detectors (of the order of 1000 or more) to be read out with a single wire, and (iii) the intrinsic sensitivity could theoretically be very high. We propose to couple these new detectors with the current developments made for TESs. Within 2 years, a demonstrator of a few hundred KIDs will be realized, fully compatible with the QUBIC requirements. The number of detectors will be further increased to reach some thousands of KIDs within 10 years.

- Realization of receiver horns based on platelet technology.

3. Understanding the nature of Dark Energy

The other sub-work package has a different timeline: it concerns the analysis of data from experiments probing the nature of dark energy. These are based on large-scale surveys, which require storing and analysing massive amounts of data. The François Arago Centre, together with the IN2P3 computing centre in Lyon, plays a significant role in this challenging task. The work consists in identifying the exact needs for data storage and processing (2011-2014) and then participating in setting up an international centre for dark energy, as already planned in the US by the LSST collaboration (2015-2020).

Progress in this field is expected both on the theoretical and observational sides.

On the theoretical side, alternative models of dark energy are examined along with possible large-distance modifications of gravity. Both directions should be followed, given that dark energy is currently only known through its gravitational effects. Hence, the observations from which its existence is inferred can also be explained by changes in the laws of gravity at cosmological distances. There are plenty of models which replace a simple cosmological constant by a new, more or less exotic dark content of the Universe, but no fully consistent model of large-distance modification of gravity is known. The APC and LUTh theory groups are involved in both directions, with a special expertise at APC in the study of large-distance modifications of gravity. New proposals along this line have been made by APC theorists and are under examination.

From an observational point of view, understanding dark energy requires a more accurate characterization of its properties, as well as tests of standard general relativity. Two complementary families of measurements should be pursued, since together they make it possible to distinguish modified gravity from dark energy sensu stricto: measurements of the expansion rate of the Universe and its evolution, and measurements of the growth of structures, whose rate is slowed down by dark energy.

For each of these measurements, several complementary techniques should also be used: the exquisite precision required of these observations makes it necessary to carefully control degeneracies and systematic effects, which affect different probes in different ways.

APC is involved in several short- and long-term observational projects using several dark energy characterization techniques, through its wide-field astronomy group. It is also participating in the Planck project (see above) and will be able to use CMB data in correlation with other wide-field surveys. In the longer term, APC is focusing on cosmic magnification, another way to exploit gravitational lensing besides the more common shear measurement; this technique is very well adapted to the depth of future surveys. The preparation of future analyses is currently done on SDSS data, and APC plans to apply this technique to LSST and Euclid data. LSST (Large Synoptic Survey Telescope) is a telescope with an 8.4 m diameter main mirror, which should get its first scientific images in 2019. It is located on top of Cerro Pachón in Chile, which already houses the Gemini South telescope. The camera has a mosaic of 200 4k x 4k CCDs, totaling 3.2 billion pixels, with a field of view of ten square degrees.

This project, led by Anthony Tyson from the University of California, Davis, has been ranked by the Astronomy and Astrophysics Decadal Survey “New Worlds and New Horizons in Astronomy and Astrophysics” as its top priority for the next large ground-based astronomical facility. Euclid, a space-based project, is proposed by a European consortium (led by Alexandre Refregier from CEA/IRFU/SAp) and was selected by ESA in October 2011 (see the Euclid ESA page). Its launch is planned for 2021.

Both experiments should be in operation around 2019-2021. APC is already strongly involved in their preparation: camera control software and photometric calibration for LSST and, most importantly, data processing for both LSST and the Euclid ground segment. Euclid is a space project that relies on ground-based data to fully exploit its science data: LSST data will be an important asset in Euclid’s science exploitation, and APC will be in charge of merging LSST and Euclid data. Since LSST data will represent tens of petabytes, the data processing will rely heavily on computing resources and staff at CC-IN2P3 and the François Arago Centre (FACe).
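As a rough sanity check on the LSST figures quoted on this page (200 CCDs of 4k x 4k pixels, and 60 petabytes of raw data over 10 years), the arithmetic can be sketched as follows. The function names are illustrative, and the sketch assumes that "4k" means 4000 pixels per side, that one petabyte is 10^15 bytes, and that the data flow is averaged over every calendar day; none of these conventions is stated in the text.

```python
# Back-of-the-envelope check of the LSST figures quoted in the text.
# Assumptions (not from the source): "4k" = 4000 pixels per side,
# 1 PB = 1e15 bytes, and the volume is averaged over all calendar days.

def total_pixels(n_ccds: int, side: int) -> int:
    """Number of pixels in a mosaic of n_ccds square CCDs of side x side pixels."""
    return n_ccds * side * side


def mean_daily_volume_tb(total_pb: float, years: float) -> float:
    """Average raw data volume per day, in terabytes (1 TB = 1e12 bytes)."""
    total_bytes = total_pb * 1e15
    days = years * 365.25
    return total_bytes / days / 1e12


if __name__ == "__main__":
    print(f"{total_pixels(200, 4000) / 1e9:.1f} billion pixels")
    print(f"{mean_daily_volume_tb(60, 10):.1f} TB per day on average")
```

Under these assumptions the mosaic indeed totals 3.2 billion pixels, and 60 petabytes over 10 years corresponds to an average of roughly 16 terabytes of raw data per day.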


| Position | Name | Laboratory | Grade, employer |
|---|---|---|---|
| WP leader | Giraud-Héraud, Yannick | APC | DR – CNRS |
| WP co-leader | Piat, Michel | APC | Professor – Université Paris Diderot |
| WP co-leader | Hamilton, Jean-Christophe | APC | DR – CNRS |
| WP co-leader | Aubourg, Eric | APC | CEA |
| WP co-leader | Langlois, David | APC | DR – CNRS |
| WP member | Bartlett, James G. | APC | Professor – Université Paris Diderot |
| WP member | Ganga, Ken | APC | DR – CNRS |
| WP member | Bucher, Martin | APC | DR – CNRS |
| WP member | Stompor, Radek | APC | DR – CNRS |
| WP member | Patanchon, Guillaume | APC | MCF – Université Paris Diderot |
| WP member | Errard, Josquin | APC | CR – CNRS |
| WP member | Grandsire, Laurent | APC | IR1 – CNRS |
| WP member | Bui Van Tuan | APC | PhD – Sorbonne Paris Cité – ED560 |
| WP member | Smoot, George | APC/PCCP | Professor – Université Paris Diderot/USPC |
| WP member | Prêle, Damien | APC | IR2 – CNRS |
| WP member | Salatino, Maria | APC | PostDoc |
| WP member | Vergès, Clara | APC | PhD – Sorbonne Paris Cité – ED560 |
| WP member | El Bouhargani, Hamza | APC | PhD – Sorbonne Paris Cité – ED560 |
| WP member | Murray, Calum | APC | PhD – Sorbonne Paris Cité – ED560 |
| WP member | Beck, Dominic | APC | PhD – Sorbonne Paris Cité – ED560 |
| WP member | Doux, Cyrille | APC | PostDoc |
| WP member | Hoang Duc Thuong | APC | PhD – Sorbonne Paris Cité – ED560 |


QUBIC:

QUBIC, as a bolometric interferometer, is anticipated to offer an unprecedented level of control of systematics thanks to the possibility of performing self-calibration. However, this clear advantage comes at the cost of a more complicated data analysis than with classical imagers: the synthesized beam is highly structured (multiply peaked and frequency dependent, with non-Gaussian beam shapes), so the map-making process needs to be based on specific algorithms. This important issue was solved in the past three years by Pierre Chanial, thanks to funding from the Labex Univ’Earths. He developed a pipeline using high-performance parallel computing to implement a realistic simulator of QUBIC time-ordered data, using multiple convolutions of the input sky and time-ordered data and accounting for realistic instrumental configurations (including 1/f noise). This simulator is then used in an inverse-problem approach that minimizes the residuals between the synthetic time-ordered data and the real ones in order to converge towards the maximum-likelihood sky maps.

Thanks to the work of F. Incardona (supervised by P. Chanial and also funded by the Labex), this work has been extended to large bandwidths, and thanks to the work of N. Krachmalnicoff, realistic dust foregrounds are now included in the sky model. We have also developed computationally efficient codes to estimate the power spectra using various techniques (pseudo-spectra using the Xpol [Hinshaw et al. 2003, Tristram 2005] and XPure [Grain et al. 2009] methods, maximum-likelihood estimates). With them we have been able to show that the anticipated sensitivity of QUBIC from analytical estimates [Battistelli et al. 2008], σ(r)=0.01 in two years of data, is indeed achieved with a full end-to-end simulation pipeline, assuming that the noise has the characteristics expected at the site where QUBIC will be installed in 2018, on the Puna plateau in Argentina, next to the town of San Antonio de los Cobres. Such simulations were decisive in writing the QUBIC Technical Design Report [arXiv:1609.04372].
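To make the inverse-problem approach described above more concrete, here is a minimal toy sketch in Python with NumPy. It is not the QUBIC pipeline: the acquisition matrix `H`, the three-peak "synthesized beam" (one main peak and two side peaks of relative weight 0.3), the white-noise model and all function names are assumptions chosen for illustration. The sketch simulates time-ordered data d = H m + n from an input sky m and recovers the maximum-likelihood map by solving (Hᵀ N⁻¹ H) m = Hᵀ N⁻¹ d with a conjugate-gradient iteration, which is equivalent to minimizing the noise-weighted residual between synthetic and measured time-ordered data.

```python
import numpy as np


def build_operator(n_samples, n_pixels, rng, side_peak=0.3, offset=7):
    """Toy acquisition matrix H: each time sample sees one main pixel plus
    two weaker side peaks, mimicking a multiply peaked synthesized beam.
    (Hypothetical model, chosen only for illustration.)"""
    H = np.zeros((n_samples, n_pixels))
    main = rng.integers(0, n_pixels, size=n_samples)  # random scanning
    rows = np.arange(n_samples)
    H[rows, main] = 1.0
    H[rows, (main + offset) % n_pixels] = side_peak
    H[rows, (main - offset) % n_pixels] = side_peak
    return H


def conjugate_gradient(apply_A, b, max_iter=500, rtol=1e-10):
    """Solve A x = b for a symmetric positive-definite operator A."""
    x = np.zeros_like(b)
    r = b.copy()          # residual b - A x, with x = 0 initially
    p = r.copy()          # search direction
    rs = r @ r
    b_norm = np.sqrt(b @ b)
    for _ in range(max_iter):
        if np.sqrt(rs) <= rtol * b_norm:
            break
        Ap = apply_A(p)
        alpha = rs / (p @ Ap)
        x += alpha * p
        r -= alpha * Ap
        rs_new = r @ r
        p = r + (rs_new / rs) * p
        rs = rs_new
    return x


def make_map(H, tod, sigma):
    """Maximum-likelihood map for white noise of standard deviation sigma:
    solve (H^T N^-1 H) m = H^T N^-1 d with N = sigma^2 * identity."""
    inv_var = 1.0 / sigma**2
    b = inv_var * (H.T @ tod)
    return conjugate_gradient(lambda m: inv_var * (H.T @ (H @ m)), b)


def run_toy_example(seed=0, n_samples=5000, n_pixels=50, sigma=0.1):
    """Simulate time-ordered data from a random sky and reconstruct the map."""
    rng = np.random.default_rng(seed)
    H = build_operator(n_samples, n_pixels, rng)
    sky = rng.standard_normal(n_pixels)
    tod = H @ sky + sigma * rng.standard_normal(n_samples)
    return sky, make_map(H, tod, sigma), H, tod


if __name__ == "__main__":
    sky, estimate, _, _ = run_toy_example()
    print("largest map error:", np.max(np.abs(estimate - sky)))
```

In a realistic setting the noise is correlated (1/f), so N is no longer diagonal and H is far too large to store densely; both are applied as operators inside the same kind of iterative solver, which is why high-performance parallel computing is needed.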

This set of software is now the basis of the QUBIC Simulation and Data Analysis Pipeline, which is currently being developed. We stress that this work was only made possible by the support of the Labex Univ’Earths (salaries of P. Chanial and support for the stay of F. Incardona).

In 2017 and 2018, with the arrival in the project of Maria Salatino (post-doc funded by the labex) and Steve Torchinsky (IR1, Observatoire de Paris), the main contribution of the labex WP F2 to QUBIC has targeted the development of scripts for data acquisition and analysis of the TES arrays and the improvement of the laboratory testbed. Maria Salatino wrote a set of scripts that automatically run the data analysis and characterize the TES arrays. For example, with Steve Torchinsky, she developed a script to automatically acquire I-V curves (IVs) at different temperatures (temperature ramp). Given the thermalization time (30 min) required between temperature steps to ensure that the detectors are at the set-point temperature, and given the degrees of freedom in the TES heat transport equation, at least seven or eight different temperatures are necessary. This script enables overnight IV acquisition, leaving business hours free for other kinds of tests and reducing by 50% the time required to characterize TES arrays.

During the same period, the APC team continued to improve the readout electronics. Time Domain Multiplexing readout is commonly affected by aliasing noise, due to noise above the Nyquist frequency leaking into the readout bandwidth. This noise can be reduced by routing each TES signal through a suitable inductor that attenuates the noise above the readout Nyquist frequency. Given the dimensions of the test inductors (about 6 mm × 6 mm), they cannot be integrated in the existing SQUID boxes; therefore, a suitable Printed Circuit Board (PCB) has to be developed. The PCB has to satisfy strict mechanical constraints in the 1 K readout stage as well as electronic ones (e.g. avoiding the introduction of parasitic resistances). The design of this custom PCB, meeting all the requirements, is finished, and it will soon be sent to a suitable company for production.

Finally, as APC is the PI laboratory of QUBIC, four collaboration meetings were organized in 2017 at Paris Diderot University: April 20-21, June 22, September 21 and November 28.
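The aliasing issue mentioned above for Time Domain Multiplexing readout can be illustrated with a short Python sketch. It shows where a tone above the Nyquist frequency reappears after sampling, and how much a first-order low-pass filter attenuates it beforehand. The numbers in the usage lines are purely illustrative and are not QUBIC parameters, and modelling the inductor as a simple first-order low-pass filter is an assumption made here, not a description of the actual circuit.

```python
def aliased_frequency(f_signal: float, f_sample: float) -> float:
    """Frequency at which a tone at f_signal appears once it is sampled
    at f_sample (folding around multiples of the sampling frequency)."""
    f_folded = f_signal % f_sample
    return min(f_folded, f_sample - f_folded)


def lowpass_gain(f: float, f_cut: float) -> float:
    """Amplitude response of a first-order low-pass filter with cut-off
    frequency f_cut (assumed model for the inductor filtering)."""
    return 1.0 / (1.0 + (f / f_cut) ** 2) ** 0.5


if __name__ == "__main__":
    f_sample = 1000.0   # illustrative sampling frequency, in Hz
    f_noise = 1300.0    # noise tone above the Nyquist frequency (500 Hz)
    print("appears at", aliased_frequency(f_noise, f_sample), "Hz")
    print("attenuated by a factor", lowpass_gain(f_noise, f_cut=400.0))
```

With these illustrative values, a noise component at 1300 Hz folds back to 300 Hz, inside the readout band; placing the filter cut-off below the Nyquist frequency reduces its amplitude before it is sampled.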

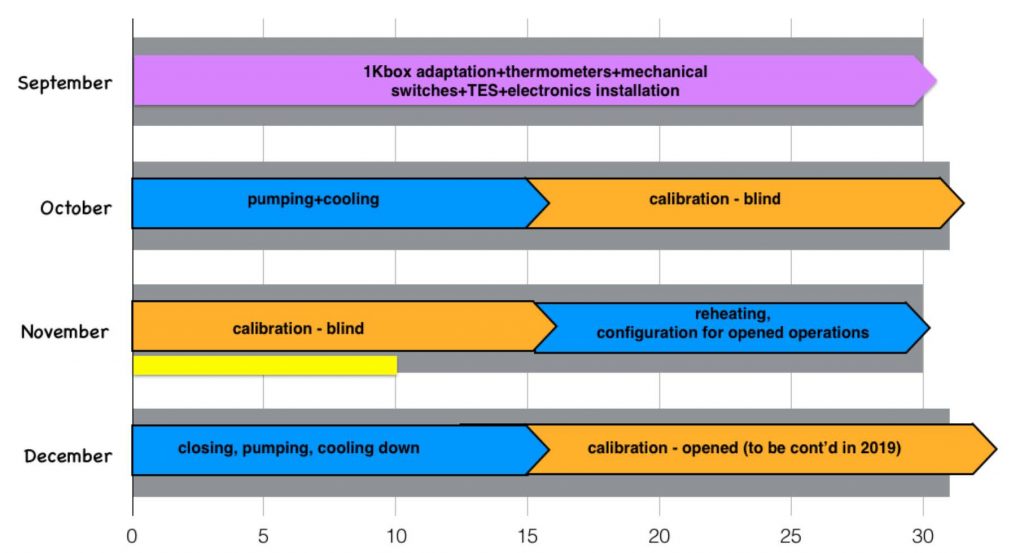

More specifically, 2018 saw a breakthrough in the development of the QUBIC technological demonstrator (TD), which will be installed in Argentina at the beginning of 2019. The tentative schedule of this installation is given in figure 1. A large effort has been made, and the TD equipped with one TES array (see figure 2) is going to be cooled down at the beginning of November 2018 in the APC hall.

Figure 1: QUBIC Technological Demonstrator schedule for installation in Argentina

Figure 2: Insertion of 1K cooler in the Technological Demonstrator in the APC hall

During her one-year post-doc funded by the labex, Maria Salatino worked on many subjects related to millimetre-wave instrumentation (see below for the Simons Observatory and CMB-S4 contributions). We would just like to mention:

– QUBIC: room-temperature measurements of SQUIDs and TES arrays. Readout electronics tuning. Study of systematic effects in the QUBIC experiment arising from the half-wave plate (HWP): estimate of the sources and amplitudes of the 2f and 4f signals.

– MKIDs: MKID fabrication in the Paris Observatory cleanroom. Fabrication of test samples on 2-inch silicon wafers for testing fabrication recipes. Fabrication of two real devices on 3-inch high-resistivity silicon wafers with materials: Al-Au, Au.

POLARBEAR/Simons Observatory:

In 2017 the POLARBEAR collaboration published new constraints on the CMB B-mode power spectrum on small angular scales. These are based on two observational campaigns and update the previous constraints published in 2014. The new result amounts to a nearly 4-sigma detection of the amplitude of the lensing-generated signal. The latest paper was co-coordinated by a former APC student, J. Peloton, and the results are based on analyses performed with two independent data analysis pipelines, one of which was developed at APC.

We also started working on the analysis of data from the third campaign. This was the first campaign in which we observed a relatively large patch of the sky (~1% of the full sky), optimized for a search for the primordial B-mode signal, which is dominant on large angular scales. While we do not yet have sufficient sensitivity to set competitive limits on the large-scale B-modes, the existing data have been analyzed from the perspective of instrumental effects and overall instrument performance, in view of the preparation and optimization of the Simons Observatory. Thanks to the polarization modulator, a half-wave plate, we are able to recover the large-angular-scale signal with only a minor loss of precision. This is an important indication that imaging experiments like POLARBEAR can be capable of setting constraints on the primordial B-modes. This analysis, involving researchers from APC, has already been published. In 2018, we co-authored an article studying the linear polarization signal coming from high-altitude icy clouds, which are potentially detectable by ground-based CMB observatories. We are now working on this data set, focusing on the estimation of the gravitational lensing potential and on demonstrating the so-called delensing procedure. This work is co-led by a student at APC, Dominic Beck. Another PhD student, Clara Vergès, who started in the fall of 2017, is currently performing characterizations and simulations of instrumental systematic effects in the context of the Simons Observatory.

The Labex funds in 2018 were instrumental in helping us to participate in collaboration meetings of the Simons Observatory. The Simons Observatory is a continuation of the POLARBEAR/Simons Array project and a pathfinder for the next, ‘ultimate’ generation of large-scale, ground-based CMB experiments, and is expected to be deployed in early 2020. Later, it is planned to be included as part of the ‘ultimate’ observatory, CMB-Stage IV, which is currently under discussion in the US. Our group at APC is involved in the planning and optimization of the Simons Observatory instruments, whose size and complexity are unprecedented in the CMB field. We are responsible for the definition of the observational frequency bands and their sensitivities with regard to the experiment’s capability to detect the primordial B-mode signal. We are also coordinating the effort to define the data management structure and data analysis pipeline. In 2017 a preliminary baseline design was proposed, which underwent thorough tests and verifications. We coordinated and led aspects of this global effort, which resulted in a keystone white paper for the collaboration, “The Simons Observatory: Science goals and forecasts”, which has been submitted for publication.

The Labex co-funded four trips (Radek Stompor, Josquin Errard, Dominic Beck, Clara Vergès) to the yearly Simons Observatory meeting in June 2018. This allowed us to report on the work we had done at APC, to contribute to a number of discussion panels and groups, and to coordinate some of them. It has helped establish APC as a serious and impactful member of the entire collaboration. R. Stompor and J. Errard are now advisor and co-pipeline lead for the analysis of the large-aperture telescopes, aiming at the detection of primordial B-modes. R. Stompor is a member of the Simons Observatory Theory and Analysis and Data Management Committees, which coordinate the analysis organization and pipeline development at the project level.

We also used Labex funds for a trip to Grenoble, to present and discuss the potential involvement of French teams in the Simons Observatory project. The Grenoble expertise in CMB instrumentation and in the operation of large-aperture telescopes could be of great interest for observational cosmology in Grenoble and France, and for the advancement of the Simons Observatory project.

LiteBIRD:

LiteBIRD is a Japan-led satellite mission focused on detecting the primordial B-mode signal and verifying the inflationary paradigm. The mission is undergoing a phase-A study in Japan and has successfully concluded such a study in the US.

Researchers from APC have been involved in LiteBIRD since 2015, either as full team members or as external collaborators. They work on two key issues of the satellite design: the choice of frequency bands and their sensitivities, together with their impact on the satellite performance, and the impact and mitigation of selected instrumental systematics. In both areas we play coordinating and leading roles. In 2017 we initiated the establishment of the LiteBIRD-France collaboration, which resulted in the preparation and submission, in September 2017, of a mission-of-opportunity proposal to CNES. This successfully led to the start of a Phase A at CNES in 2018. The LiteBIRD-France collaboration currently involves 35+ researchers from 9 institutes in France, and APC is one of the coordinators of this ongoing effort. We are also a driving force behind the European-level effort, which is now being organized with the aim of preparing a proposal to ESA.

The Labex contribution to this kind of project is decisive in building the laboratory contribution; it was key in helping us to co-coordinate the effort to define a European-level contribution to LiteBIRD. The Labex funds were used to cover the expenses of the participation of R. Stompor and M. Bucher in the second European LiteBIRD meeting in Turin, Italy (February 8-9, 2018), and of J. Errard and G. Patanchon in a hands-on meeting in Munich, Germany (April 24-27, 2018). These two meetings were essential for the development of the LiteBIRD CMB satellite project, in particular in view of the selection of the mission at the end of 2018 by JAXA, the Japanese space agency. Our presence there was necessary, as we are currently committed to delivering crucial parts of the mission, namely science forecasts, optimization of the instrument design and project organization.

Organized as hands-on meetings, these gatherings of the European collaborators on the LiteBIRD project, together with key Japanese members of the collaboration, are crucial to foster international collaboration on various studies, from instrumentation to software development. R. Stompor, M. Bucher and G. Patanchon hold key positions within the collaboration structure and actively contribute to the preparation of data processing and science exploitation within the collaboration. R. Stompor is a French representative on the European Steering Committee of LiteBIRD, and G. Patanchon is a European coordinator of LiteBIRD’s Joint Study Group. J. Errard is leading the development and exploitation of the component separation tools used to forecast the scientific performance of LiteBIRD.

Joint analyses of cosmological probes:

In 2017 we only just opened this new field of research, contrary to what we had planned. However, we can already highlight our participation in the LSST DESC meeting at Stony Brook University (NY) from July 10 to July 15, 2017. For the team who attended this meeting with the support of the labex (Eric Aubourg, Cyrille Doux and Cécile Roucelle), the particular interest was 1) to discuss the work developed by Cyrille Doux during his PhD (Doux, C. et al., Cosmological constraints from a joint analysis of cosmic microwave background and large-scale structure, arXiv:1706.04583 (2017)) with collaborators in the Theory and Joint Probes (TJP) working group, 2) to discuss further involvement in this working group on forecast studies, and 3) to discuss ideas with other LSST members for a new, related project on the combination of ground- and space-based weak lensing surveys, e.g. with LSST and Euclid. This effort, which aims at building this new direction, was pursued in 2018.

CMB-S4:

The next generation of ground-based CMB experiments, CMB Stage 4 (CMB-S4), is a massive undertaking to deploy of the order of 500,000 detectors on the sky to increase sensitivity by at least an order of magnitude. Using a suite of small- and large-aperture telescopes distributed between the South Pole and the Atacama Desert in Chile, the primary science goals are to search for primordial gravitational waves, through the polarized B-mode CMB anisotropy signal, and to constrain the number of light particle species produced in the early universe, by measuring the effective number of degrees of freedom. Additional science covers a vast range of subjects, from neutrino mass, dark energy and modified gravity to structure and galaxy formation; much of this science is unique thanks to CMB-S4's ability to simultaneously trace dark matter and ionized gas out to high redshift through gravitational lensing of the CMB and the Sunyaev-Zeldovich (SZ) effects, respectively. Surveying 40% of the southern sky starting in the mid-2020s, CMB-S4 will be the millimeter-wave complement to the Large Synoptic Survey Telescope (LSST) and the Euclid and WFIRST space missions. This will open up the entirely new science area of cross-correlations between cosmological observables, such as CMB lensing, the SZ effect and galaxy catalogs.

With its long-standing expertise in CMB science and strong involvement in Euclid and LSST, the APC is fully engaged in all scientific aspects of CMB-S4. The U.S. Department of Energy (DoE) and the National Science Foundation (NSF) recently mandated the Conceptual Design Task Force (CDT) to propose a straw-person concept, and their report will appear in late October 2017, kicking off a series of agency reviews and procedures. The entire U.S. CMB community is pooling its experience to make CMB-S4 happen, with major national labs preparing to participate in its development, construction and operation. Most importantly for us, international participation is actively sought.

Thanks to the LabEx, Ken Ganga participated in the CMB-Stage 4 meeting in Princeton in September 2018 (one of the two annual CMB-S4 meetings; other commitments prevented him from attending the second). With the help of APPEC, he also organized the fourth in a series of community meetings designed to involve a larger European community in the CMB-Stage 4 project (see https://indico.in2p3.fr/event/17625/overview ). While APPEC financed much of the meeting as a whole, LabEx funds were needed specifically for Ken Ganga’s travel. Along the same lines, he was invited to present these European CMB coordination efforts at a CMB conference in October on European coordination for next-generation experiments (http://www.iac.es/congreso/cmbforegrounds18/), for which the LabEx paid the travel costs. The labex thus supported the participation of the APC CMB team (Ken Ganga, Yannick Giraud-Héraud and Radek Stompor) in exploring, preparing and managing, at the European level, its contribution to future ground-based CMB experiments.


– Takakura et al. Measurements of tropospheric ice clouds with a ground-based CMB polarization experiment, POLARBEAR – eprint arXiv:1809.06556

– Beck, Dominic, Fabbian, Giulio, Errard, Josquin – Lensing reconstruction in post-Born cosmic microwave background weak lensing – Physical Review D, Volume 98, Issue 4, id.043512

– The Simons Observatory Collaboration – The Simons Observatory: Science goals and forecasts – eprint arXiv:1808.07445

– Ward, J.T. ; Alonso, D. ; Errard, J. ; Devlin, M.J. ; Hasselfield, M. – The Effects of Bandpass Variations on Foreground Removal Forecasts for Future CMB Experiments – The Astrophysical Journal, Volume 861, Issue 2, article id. 82, 9 pp. (2018)

– Doux, Cyrille; Penna-Lima, Mariana; Vitenti, Sandro D. P.; Tréguer, Julien; Aubourg, Eric; Ganga, Ken – Cosmological constraints from a joint analysis of cosmic microwave background and spectroscopic tracers of the large-scale structure – Monthly Notices of the Royal Astronomical Society, Volume 480, Issue 4, p.5386-5411

– Salatino, Maria et al. – Performance of NbSi Transition-Edge Sensors readout with a 128 MUX factor for the QUBIC experiment – SPIE 2018

– Salatino, Maria et al. – Studies of Systematic Uncertainties for Simons Observatory: Polarization Modulator Related Effects – SPIE 2018

– O’ Sullivan et al. – Simulations and performance of the QUBIC optical beam combiner – SPIE 2018

– Takakura, S. and the POLARBEAR collaboration, Performance of a continuously rotating half-wave plate on the POLARBEAR telescope, Journal of Cosmology and Astroparticle Physics, Issue 05, article id. 008 (2017).

– The POLARBEAR collaboration, A Measurement of the Cosmic Microwave Background B-Mode Polarization Power Spectrum at Sub-Degree Scales from 2 years of POLARBEAR Data, accepted for publication in the Astrophysical Journal, (2017)

– Poletti, D. and the POLARBEAR collaboration, Making maps of cosmic microwave background polarization for B-mode studies: the POLARBEAR example, Astronomy & Astrophysics, Volume 600, id.A60, (2017)

– Matsumura, T. and the LiteBIRD collaboration, LiteBIRD: Mission Overview and Focal Plane Layout, Journal of Low Temperature Physics, Volume 184, Issue 3-4, pp. 824-831, (2016)

– Suzuki, A. and the POLARBEAR collaboration, The Polarbear-2 and the Simons Array Experiments, Journal of Low Temperature Physics, Volume 184, Issue 3-4, pp. 805-810, (2016)

– Doux, Cyrille et al. – Cosmological constraints from a joint analysis of cosmic microwave background and large-scale structure – arXiv:1706.04583 (2017)

– Duc Thuong Hoang et al. – Bandpass mismatch error for satellite CMB experiments I: Estimating the spurious signal (https://arxiv.org/abs/1706.09486)