Y3 Advanced Gamma-Ray Science Methods and Tools

> Read the articles connected to the project.

-

The goal of this project is to study modern techniques of signal processing from the wider scientific and mathematical community and apply them to the analysis of very-high-energy gamma ray data.

This applies both to the reconstruction of raw data from Cherenkov telescopes, where we strive to lower the energy threshold and improve angular resolution and cosmic-ray rejection, and to the real-time detection of transient sources in high-level data.

This study benefits current and future gamma-ray instruments, in particular the Cherenkov Telescope Array (CTA) observatory, while simultaneously giving visibility to local groups within several large projects.

-

| POSITION | NAME SURNAME | LABORATORY NAME | GRADE, EMPLOYER |
| --- | --- | --- | --- |
| WP leader | Karl KOSACK | AIM | CDI, CEA |
| WP member | Jérémie Decock | AIM | Postdoc, Univ. Paris VII |
| WP member | Sandrine Pires | CosmoStat | CDI, CEA |
| WP member | Bruno Khelifi | APC | CDI, CNRS |
| WP member | Fabio Acéro | AIM | CDI, CNRS |
| WP member | Tino Michael | AIM | Postdoc, ASTERICS |
| WP member | Thierry Stolarczyk | AIM | CDI, CEA |
| WP member | Julian Lefaucheur | AIM | Postdoc, ASTERICS |

-

The primary objective of this project was to build a collaboration with other labs to help improve the scientific performance of Imaging Atmospheric Cherenkov Telescopes (IACTs) such as CTA or HESS. In particular, the major goals/milestones were to:

1. Identify novel signal processing techniques (e.g. those used by the CosmoStat group) that could improve sensitivity in IACTs

2. Apply these algorithms to the air-shower images (low-level data) obtained by an IACT before reconstruction, in an attempt to both lower the detectable energy threshold and improve the PSF and sensitivity.

3. Evaluate the scientific gain of such a technique after full data processing from raw data to sensitivity curves

4. Release software libraries to the public and publish a technique paper on the subject

5. Apply the technique to real science data from HESS (or preliminary CTA if available)

Goals 1-3 were achieved. Goal 4 was partially achieved (the software has been released) and will be completed by a publication that is in progress and that we hope to release in 2019. Goal 5 is now well within reach and will be achieved with the first data release from CTA prototype telescopes in the coming year.

Deliverables:

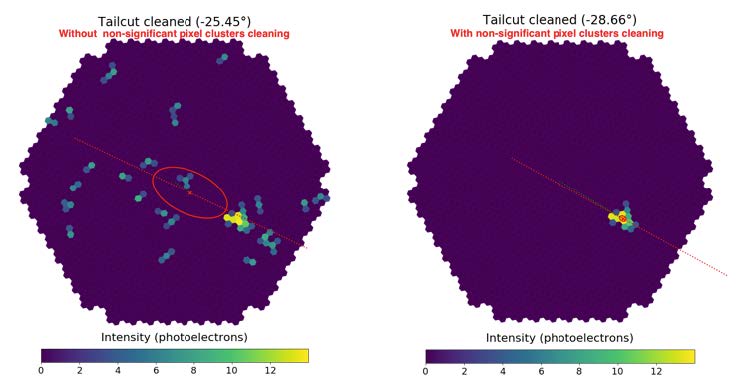

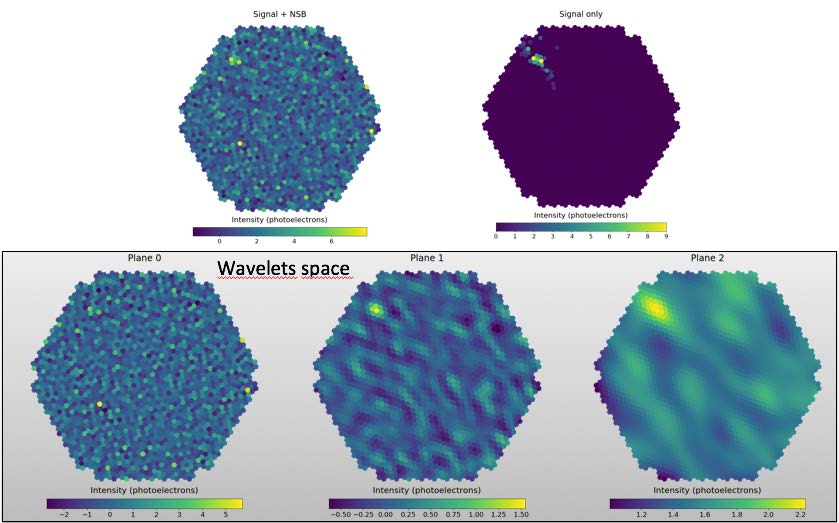

- A wavelet-based denoising algorithm, built on techniques from the CosmoStat lab, was developed, tested, and applied to realistic IACT data (from the CTA observatory) in an attempt to improve performance.

- An open-source Python software library, PyWI (http://www.pywi.org), was developed by Jérémie Decock. It includes the high-level algorithms, documentation, and examples developed in this project, and is provided as a resource to the community.

- A full end-to-end data processing chain was produced to analyze IACT data from the raw shower image to the high-level science results (reconstructed event lists and instrumental response functions). This work included contributions to the CTA prototype data processing framework ctapipe (www.github.com/cta-observatory/ctapipe). It will likely be included in the official CTA data processing pipeline, and may soon be used to process HESS data as well. The development of this chain made it possible to compare, at a high level, the scientific performance of the image processing techniques used in this project with that of standard techniques.
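To make the idea of wavelet-based denoising concrete, here is a minimal sketch of one possible scheme: an undecimated ("à trous") transform with a B3-spline kernel, followed by hard thresholding of each wavelet plane. This is an illustration only and is not the PyWI API. The function names, the number of scales, and the use of absolute thresholds (real pipelines typically threshold relative to the noise level at each scale) are all assumptions made for the example.

```python
"""Sketch of wavelet denoising by hard thresholding (illustrative, not PyWI)."""

B3 = (1 / 16, 4 / 16, 6 / 16, 4 / 16, 1 / 16)  # B3-spline smoothing kernel


def _mirror(i, n):
    """Reflect an out-of-range index back into [0, n)."""
    if n == 1:
        return 0
    period = 2 * (n - 1)
    i = abs(i) % period
    return i if i < n else period - i


def _smooth_1d(values, step):
    """Convolve a 1D sequence with B3, taps spaced `step` pixels apart."""
    n = len(values)
    return [
        sum(w * values[_mirror(i + (k - 2) * step, n)] for k, w in enumerate(B3))
        for i in range(n)
    ]


def smooth(image, step):
    """Separable 2D smoothing: rows first, then columns."""
    rows = [_smooth_1d(row, step) for row in image]
    cols = [_smooth_1d(list(col), step) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]


def starlet_denoise(image, thresholds=(3.0, 1.5)):
    """Zero small wavelet coefficients, then rebuild the image.

    thresholds: one absolute threshold per wavelet scale (assumed values).
    The output is the sum of the kept coefficients and the coarse residual.
    """
    previous = [[float(v) for v in row] for row in image]
    kept = [[0.0] * len(row) for row in image]
    for scale, threshold in enumerate(thresholds):
        smoothed = smooth(previous, step=2 ** scale)
        for y, row in enumerate(previous):
            for x, value in enumerate(row):
                coeff = value - smoothed[y][x]  # wavelet plane at this scale
                if abs(coeff) >= threshold:
                    kept[y][x] += coeff
        previous = smoothed
    return [[k + s for k, s in zip(kr, sr)] for kr, sr in zip(kept, previous)]
```

With all thresholds set to zero the function returns the input image (up to rounding), which is a convenient sanity check before tuning the thresholds for a given camera.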

Main Scientific Results:

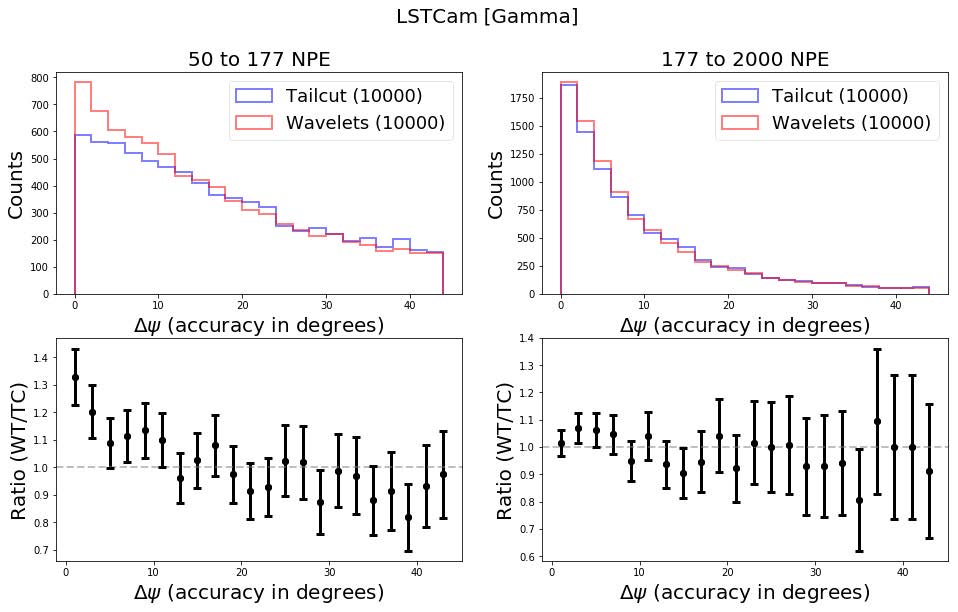

- The wavelet-based denoising achieves a better point-spread function than the standard analysis at all energies. This can be attributed to better signal extraction and to the larger number of images per shower that are usable for event reconstruction (faint images that still retain enough geometrical information to be useful, but that may be thrown out by standard cleaning techniques).

- Refinement of the technique over the past year has led to strong improvements in the single-telescope energy resolution, which should be a strong benefit to the overall response of IACTs like CTA. The final end-to-end analysis combining these techniques with the full stereo reconstruction is currently running on the EGI computing grid, and results are to be presented before the end of the year.
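A point-spread function comparison of this kind is commonly summarized by a containment radius, for example the angular offset within which 68% of reconstructed events fall. The sketch below shows one simple way to compute it; the function names and the altitude/azimuth convention are assumptions for illustration, not taken from the project's code.

```python
import math


def angular_separation_deg(alt1, az1, alt2, az2):
    """Great-circle angle in degrees between two (altitude, azimuth) directions."""
    a1, z1, a2, z2 = (math.radians(v) for v in (alt1, az1, alt2, az2))
    cos_sep = (math.sin(a1) * math.sin(a2)
               + math.cos(a1) * math.cos(a2) * math.cos(z1 - z2))
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_sep))))


def containment_radius(offsets_deg, fraction=0.68):
    """Smallest offset that contains at least `fraction` of the events."""
    if not offsets_deg:
        raise ValueError("need at least one event")
    ordered = sorted(offsets_deg)
    index = max(0, math.ceil(fraction * len(ordered)) - 1)
    return ordered[index]
```

Applying `containment_radius` to the offsets obtained with each cleaning method, on the same event sample and in the same energy bin, gives two numbers that can be compared directly; a smaller radius means a sharper point-spread function.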

Shower-direction angular difference after tail-cut and wavelet cleaning of gamma-ray shower images on the LST camera. The error bars are statistical only. Note that both distributions are obtained from exactly the same data sample.

Technical Developments:

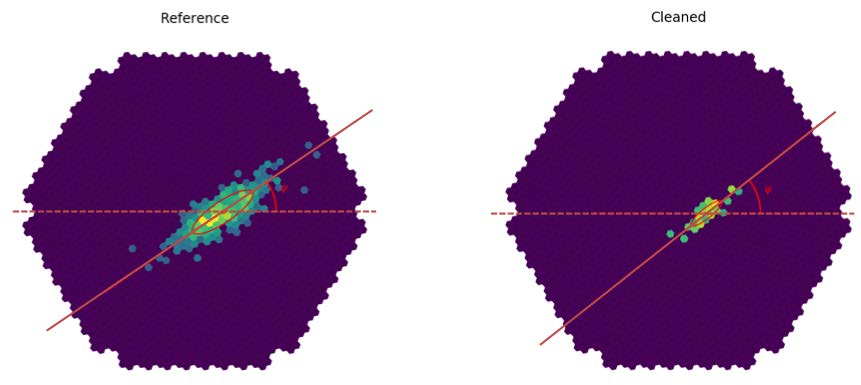

- A set of criteria was defined to evaluate the low-level performance of the denoising by comparison with a noise-free image, at the pixel and feature levels (e.g. the rotation angle of the major axis of the image). These criteria do not take into account higher-level stereoscopic information, which is handled in a later step. They were used to tune the algorithm.

- A method to apply the same technique to hexagonal-pixel cameras was also developed, by skewing the images into a rectangular geometry with no resampling needed. Four of the six CTA camera types use this geometry, as do all existing IACTs such as HESS, MAGIC, and VERITAS, so this was needed to process realistic data. The transformed image can be processed by unmodified wavelet algorithms and then transformed back to the original space. Studies showed that no strong artifacts were introduced by this transform. Since the edges of the camera are also not rectangular, the resulting images are embedded in a rectangular frame, and the “missing” pixels outside the camera border are filled in by sampling from a histogram of the true noise distribution of the surrounding pixels. This technique helped to avoid edge effects in the denoising.

- Since the wavelet cleaning parameters and thresholds depend on camera geometry and night-sky-background noise level, a software package was created to optimize them using differential evolution. The benchmarking developed in the earlier image study was used as feedback in the optimization. With this package, optimization was performed for all CTA cameras and noise levels, and the procedure can be automated and re-run to support further cases, such as the HESS cameras (see future prospects).
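The skewing of hexagonal-pixel images into a rectangular array, described in the list above, can be sketched as follows. The example assumes a regular hexagonal lattice in which each row is shifted by half a pixel pitch relative to the previous one, so that integer "axial" indices map directly onto array rows and columns without resampling. The function names, the `(x, y, charge)` pixel layout, and the simplification of drawing padding values directly from a list of noise samples (rather than from a histogram of surrounding pixels) are assumptions for illustration.

```python
import math
import random


def hex_to_axial(x, y, pitch):
    """Map a hexagonal pixel centre (x, y) to integer (col, row) indices."""
    row = round(y / (pitch * math.sqrt(3) / 2))
    col = round(x / pitch - row / 2)  # undo the half-pitch shift per row
    return col, row


def embed_in_rectangle(pixels, pitch, noise_samples, rng):
    """Place hexagonal pixels in a 2D grid, padding gaps with noise.

    pixels: list of (x, y, charge) tuples.
    Returns (grid, slots), where slots[i] is the (row, col) of pixel i.
    """
    coords = [hex_to_axial(x, y, pitch) for x, y, _ in pixels]
    col0 = min(c for c, _ in coords)
    row0 = min(r for _, r in coords)
    n_cols = max(c for c, _ in coords) - col0 + 1
    n_rows = max(r for _, r in coords) - row0 + 1
    # Start from noise everywhere, then overwrite the real camera pixels.
    grid = [[rng.choice(noise_samples) for _ in range(n_cols)]
            for _ in range(n_rows)]
    slots = []
    for (col, row), (_, _, charge) in zip(coords, pixels):
        grid[row - row0][col - col0] = charge
        slots.append((row - row0, col - col0))
    return grid, slots


def extract_pixels(grid, slots):
    """Inverse mapping: read the camera pixels back out of the grid."""
    return [grid[r][c] for r, c in slots]
```

Because the mapping is a pure re-indexing, `extract_pixels` recovers the original pixel order exactly; any standard 2D algorithm can be run on `grid` in between.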

Full Reconstruction Chain

Since it is difficult to see the scientific impact of these improved techniques at the image-processing level alone, a full stereo shower reconstruction algorithm was developed in conjunction with a second post-doc funded by the ASTERICS project. This is needed to evaluate the final performance of the technique and to ensure, for example, that the image cleaning does not make background cosmic rays indistinguishable from gamma rays. The two projects cooperated closely to produce a final scientific study and a combined analysis. The stereo reconstruction uses the imaging techniques described above to process shower images, and combines them using a standard stereo plane-intersection reconstruction, as used in existing IACTs. The incident gamma ray’s energy, point of origin, and resulting shower development are extracted.
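The geometric idea behind a stereo plane-intersection reconstruction can be sketched as follows. Each telescope image defines a plane that contains the telescope and the shower axis; the shower direction lies along the intersection of any two such planes, which is given by the cross product of their normals. The code below is one common formulation under simplifying assumptions (plane normals already known, pairs weighted by the sine of the angle between planes, showers arriving from above); the function names are hypothetical and this is not the project's implementation.

```python
import itertools
import math


def cross(a, b):
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def unit(v):
    """Return v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        raise ValueError("cannot normalise a zero vector")
    return tuple(c / length for c in v)


def estimate_direction(plane_normals):
    """Combine pairwise plane intersections into one shower direction.

    plane_normals: one normal vector per telescope image (at least two).
    """
    normals = [unit(n) for n in plane_normals]
    total = [0.0, 0.0, 0.0]
    for n1, n2 in itertools.combinations(normals, 2):
        line = cross(n1, n2)  # length = sin(angle between the two planes)
        if line[2] < 0:       # orient every estimate upward (assumption)
            line = tuple(-c for c in line)
        total = [t + c for t, c in zip(total, line)]
    return unit(total)
```

Nearly parallel planes contribute short cross products, so they are automatically down-weighted in the sum; a pair of exactly parallel planes contributes nothing.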

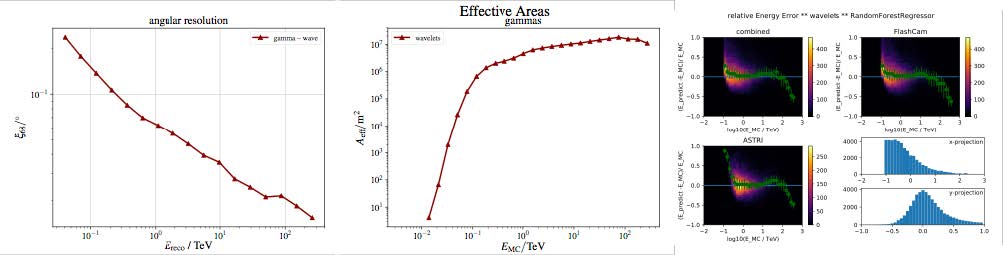

Figure 2 – Steps in shower reconstruction

The same analysis is performed on an ensemble of over 1M gamma-ray (signal) and cosmic-ray (background) simulations to produce high-level instrumental response functions: the effective collection area, point-spread function, and energy dispersion matrix. These are combined to produce a differential sensitivity curve, showing the minimum detectable flux of an astrophysical object as a function of energy. Using this high-level scientific measure, we can then compare the sensitivity of an IACT array with and without the new denoising techniques (in the latter case, the standard technique of 2-level image thresholding known as “picture-boundary cleaning” or “tail-cuts cleaning” was used as a baseline).

To distinguish between signal (gamma rays) and background (cosmic-ray hadrons), a parameterization of the images and other reconstruction measures are fed into a machine-learning system trained on Monte Carlo simulated showers. In the current implementation we do not include the wavelet parameters in this discrimination, but will do so in a future development.
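For reference, the two-level "tail-cuts" baseline mentioned above can be sketched in a few lines. In this variant, pixels above a high "picture" threshold are kept if at least one neighbour is also above it, and pixels above a lower "boundary" threshold are kept if they touch a kept picture pixel. The threshold values, the rule for isolated pixels, and the dictionary-based camera description are assumptions for illustration; production implementations offer more options.

```python
def tailcuts_clean(charges, neighbors, picture_thresh=10.0, boundary_thresh=5.0):
    """Return the set of pixel ids that survive two-level thresholding.

    charges:   dict mapping pixel id -> charge (e.g. in photoelectrons)
    neighbors: dict mapping pixel id -> iterable of adjacent pixel ids
    """
    picture = {p for p, q in charges.items() if q >= picture_thresh}
    # Drop isolated picture pixels: require at least one picture neighbour.
    core = {p for p in picture if any(n in picture for n in neighbors[p])}
    # Add dimmer pixels that sit next to a surviving picture pixel.
    boundary = {
        p for p, q in charges.items()
        if q >= boundary_thresh and any(n in core for n in neighbors[p])
    }
    return core | boundary
```

In a chain of six pixels with charges 1, 6, 12, 15, 4, 11, the pixels with charges 12 and 15 form the core, the pixel with charge 6 is kept as a boundary pixel, and the isolated pixel with charge 11 is discarded even though it exceeds the picture threshold.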

This process is extremely CPU intensive, as one needs to analyze millions of simulated showers. For this reason, the analysis is launched on the EGI computing grid (where the necessary simulations, totaling around 1 PB of data, are stored), and takes approximately 1-2 weeks to produce a final sensitivity curve for evaluation. The main limiting factor is obtaining sufficient statistics at high energies for cosmic-ray background events.

Figure 3 – Examples of Instrumental Responses derived using the wavelet cleaning and reconstruction

-

The work has so far been presented only at a CTA consortium meeting and a CTA pipelines workshop, mainly because concrete results were lacking until recently. We hope to present the final results at a later venue.